FileMaker Pro Audit Trail “Revisited”

By Doug West

**Please visit our updated Filemaker Pro Audit Trail Refined page.

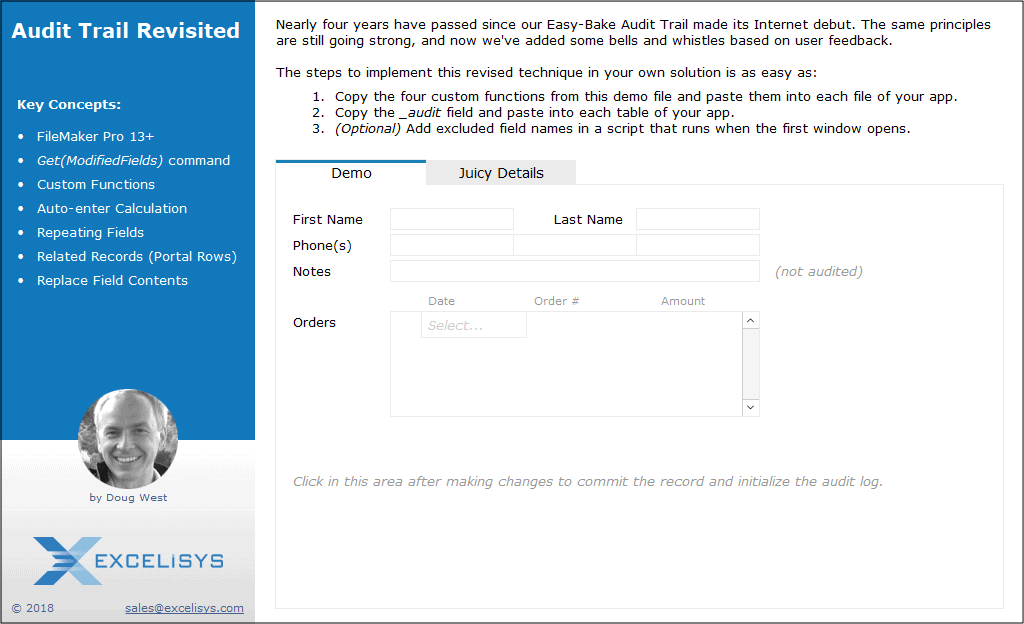

Nearly four years have passed since our last Easy-Bake FileMaker Pro Audit Trail made its Internet debut. The same principles are still going strong, and now we’ve added some bells and whistles based on user feedback.

The steps to implement this revised FileMaker Pro audit trail technique in your own solution is as easy as:

- Copy the four custom functions from this demo file and paste them into each file of your app.

- Copy the _audit field and paste into each table of your app.

- (Optional) Add excluded field names in a script that runs when the first window opens.

Our primary goals in revisiting this FileMaker audit trail technique were to:

- Remove the need for the New Record script included in the prior tip file

- Audit all repetitions of repeating fields

- Capture changes made through the use of the Replace Field Contents command

The first of these goals provides two benefits. Besides the direct effects of the simplification and reduced effort to implement, we also gained support for auditing changes in portal rows without scripting.

Performance is always a concern when it comes to background processing and we’ve done what we could to minimize the overhead. The impact may not be noticed when editing a single record. However, when using the Replace Field Contents command, the performance impact can quickly become apparent with even relatively small found sets. The Get(ModifiedFields) function, unfortunately, doesn’t report the field modified with a Replace. Instead, we’re forced to evaluate every field on the record and compare the current field contents to the latest corresponding value in the audit history. If a future version of FileMaker Pro provides us with this useful detail, performance will improve automatically without modifying these custom functions.

Of course, this type of audit log stores the history on the same record as the current values. So what happens to the history when you delete the record? The history gets deleted along with it. A variety of solutions have been proposed to address this situation. If you’re concerned about this dilemma, why not simply capture the history to a background table through a scripted process before deleting the record?

The _audit field in this technique is also no longer dependent on a modification timestamp field in each table. If you know of an easier way to add an audit log to a FileMaker solution, we’d love to hear about it.

Enjoy using this revised version of the FileMaker Pro audit trail technique!

•• Download AuditTrailRevisited.zip (click to visit the new version)••

**This article is provided for free and as-is, use, enjoy, learn, and eXperiment at your own risk – but have fun! eXcelisys does not offer any free support or free assistance with any of the contents of this blog post. If you would like help or assistance, please consider retaining eXcelisys’ FileMaker Pro consulting & development services.

About eXcelisys, Inc. : Founded in 2001, eXcelisys (www.excelisys.com) was an FBA Platinum Partner from 2014-2018, FBA member from 2002 – 2020 and still a FileMaker Certified developer organization since version 7. eXcelisys specializes in designing, developing, customizing, supporting, consulting, migrating, upgrading, fixing, and integrating of database solutions for Desktop, Mobile, and Web applications. Our core technology competencies are FileMaker Pro and MySQL for database frameworks, along with FileMaker WebDirect, WordPress, MySQL, PHP, CodeIgniter, PostgreSQL, CSS, HTML5, and Javascript for web sites and web applications. Aside from providing eXcellent customer service, our goals are to use these technologies to intuitively automate your organization’s data solution needs seamlessly and flawlessly across the web, mobile, and desktop platforms. Contact eXcelisys today for a free estimate and consultation about making your business more efficient through intuitive and effective software automation. 866-592-9235.

eXcelisys, Inc. is an independent entity and this web site/information/blog post has not been authorized, sponsored, or otherwise affiliated with Claris, Inc. FileMaker is a trademark of Claris, Inc., registered in the U.S. and other countries.

![[FMP Tip-n-Trick] Integrating A.I. with FileMaker for Marketing Research](https://excelisys.com/wp-content/uploads/2025/08/ai-research-assistant-capture-500x383.png)

![[FMP Tip-n-Trick] FileMaker Pro Audit Trail “Refined”](https://excelisys.com/wp-content/uploads/2025/03/fmp-audit-trail-refined-500x383.webp)

Doug that is fantastic… I am looking at this and trying to determine if it is possible to tweak the CF to actually output the audit log in to a JSON array?

{

[

{

“acct” : “Stephen Dolenski”,

“field” : “test”,

“from” : “1”,

“to” : “0”,

“ts” : “4/27/2018 3:35:19 PM”

},

{

“acct” : “John Doe”,

“field” : “test”,

“from” : null,

“to” : “1”,

“ts” : “4/27/2018 3:34:10 PM”

}

]

}

Yes, it’s absolutely possible to modify the eX_Audit custom function to output a JSON result. That would be done in these two lines of the calculation:

Get ( CurrentHostTimestamp ) & “: ” & Get ( AccountName ) & ” changed ˂” & ~thisFieldName & “˃” & // Using “arrowhead” characters

Case ( not ~isContainer ; ” from ” & Quote ( $prevValue ) & ” to ” & Quote ( $thisFieldValue ) )

Since we’re just stringing text together, we could string the text together in the JSON tagging format or use the JSONSetElement() function introduced in FileMaker 16. I considered the same idea myself while developing this round of changes. However, if you’re not planning to parse the data into a records in a separate table, the JSON structure doesn’t seem to provide much of an advantage when trying to find the most recent revision to a specific field.

Doug, this is brilliant! I dropped this into a hosted file of about 23,000 records in the table I tried it on. Got about a 5 minute delay in regaining control. Dialog said updating…

Looks like it updates mods going back aways. After that, worked perfectly. Needed an audit log this brilliant anlight weight forever. Thank you so much! The developer community is always so giving.

You’re welcome! I’m glad you were able to put it to good use.

Doug, thank you so much for this exemplary audit trail and your generous donation to the community.

In the project I am developing, which is a Melbourne University social enterprise research project, I am looking to write the details of the audit (timestamp, username, original value, modified value) into seperate fields. I’m sure by analysing your solution further, I could figure it out, or find a less efficient way of parsing the data that is being generated by the _audit auto enter field. Do you have any ideas or pointers on where I could look to doing this? In some of the custom functions that exist? Or perhaps by creating multiple auto-enter _audit fields?

Perhaps I should learn how to wield more of the Execute SQL function… Any suggestions or pointers would be greatly appreciated.

We will be listing your company in our list of sponsors and supporters on our research project website. Cheers.

The eX_Audit custom function writes the new information to the audit/changelog history field. These two lines of the calculation are where you would want to start:

Get ( CurrentHostTimestamp ) & “: ” & Get ( AccountName ) & ” changed ˂” & ~thisFieldName & “˃” & // Using “arrowhead” characters

Case ( not ~isContainer ; ” from ” & Quote ( $prevValue ) & ” to ” & Quote ( $thisFieldValue ) )

FileMaker’s ExecuteSQL() function supports only reading information with a SELECT statement. Maybe you could extend this a bit to ALSO write the information to another table at the same time by using the BE_FileMakerSQL function with the free BaseElements plugin from Goya:

https://goya.com.au/

The custom functions could continue to operate as-is with a single audit field serving as an internal reference for the “from” values when a field is modified. By simply adding a bit of instruction to this custom function, you could also write to another table every time a field modification is logged with something that looks like:

BE_FileMakerSQL ( “INSERT INTO MyAuditTable (FieldX,FieldY,FieldZ) VALUES (‘ValueX’,’ValueY’,’ValueZ’)” )

You can wrap everything with FileMaker’s GetAsText() command to get the readable values you’re expecting.

Awesome tips and pointers, Doug. I hadn’t taken advantage of the Base Elements plugin, and I definitely will now. Gives me a lot to look into and tinker with. I’ll be sure to share what I come up with on your forum as well. Cheers.

Hello, Community,

I have implemented this brilliant Audit log to my app and is working smooth,

When My self imports record which is done weekly, now it takes too long to import them, record by record,

Is therey anything we can do in order to improve the import process as before which was done very fast before implenting this audit log?

The import is also logged in the audit trail if you leave the box checked to “Perform auto-enter options while importing”. Clearing that checkbox will suppress the logging during import but may have other unintended effects if you’re using auto-enter and lookup options elsewhere.

You could also suppress the logging by setting a global variable before importing and modifying the auditing custom function to only evaluate when the global variable is not set.

Of course, both of these approaches require you to disable the auditing to improve performance.

Brilliant! I was reading the older version earlier today and only seeing this updated version now. For some reason my portal row changes are still not getting captured. Any idea what may cause this?

Forgive me, all I needed to do was create a new record after copying the functions and now it works great! This is simply amazing, thanks so much for sharing with the community!

Great! I was in the process of responding that the details are logged within the record where the changes were made. So changes to related records only show in the audit field on the related record and not the parent record. You clearly got past that point, since you’re now seeing the expected results.

Doug, thank you very much for this awesome auditing template!

I had some trouble with repetition fields, though (who doesn’t…). Your recursive logging function eX_Audit stops recursion when it encounters a repetition field. This makes logging incomplete:

– In your demo file, add a new field to Customers (named zzz). Create a new record and only enter text in the zzz field before committing the record. It will not be logged (in contrast to the same test with the name field).

– In your demo file, edit a record and modify one of the phone fields _and_ the new zzz field. The changes to zzz will not be logged, as well as any modifications to fields coming after phoneNumbers in the field list.

I changed the first recursive call from:

eX_Audit ( eX_GetRepetitionsList ( ~thisFieldName ; 1 ; ~repetitionCount ) ; auditFieldRef )

to:

List (

eX_Audit ( eX_GetRepetitionsList ( ~thisFieldName ; 1 ; ~repetitionCount ) ; auditFieldRef ) ;

Case ( ValueCount ( fieldList ) > 1 ; eX_Audit ( MiddleValues ( fieldList ; 2 ; ValueCount ( fieldList ) ) ; auditFieldRef ) )

)

which seems to fix the problem.

Tested with version 17.0.4 (Mac).

Best,

Max

Dear Doug,

thanks for sharnig such great solution with us, making audit log is now simple and fast. But I have some problem with speed of the database durig creation of new record.

After creation of new record database “stops” for 5 seconds (file is open localy!) to make this first log, after that step everything is working fine (each new change is saved very fast) – but during creation of new records it slows down (I did check it with script debbuger – it stops on new record create step). In The table which I create the records the are 25 text fields, and 4 summary fields, nothing else.

Does it have to do something with select SQL ?

Hope you give me some advice to solve this problem.

Hi Doug

Thanks for sharing with us such great solutions.

I did implement it to my solution, it works, but I have some problems with creating new records. In my Table are 20 text fields, 4 summary fields. After creating new record, database slows down for 5 seconds to create new record and first log, after that each change in this records works fine, the problem is only during creation. I already “dissable” summary fileds in first window open scritp, but that doesn’t help. Database is working localy ! When I upload it to server it works the same or even slower. Maybe you can give me some clues to make more efficient ?? Is it possible ??

Thanks in advance

Regards

Do you use repeating fields in your solution? If not, there may be a quick fix. You can try removing the text in the eX_Audit custom function that starts with “~repetitionCount =” and continues until the “]” character (leaving the bracket in place).

Hi Doug,

I didn’t use repeating fields. I did what you suggested and now is working fine and very quick. Thank you for the file and support : ) I really appreciate it.

Just to let you know, I needed also to delete line with “If ( ~repetitionCount > 1 and not ~isContainer ; ….” as I couldn’t t save the function.

Have a nice day. Regards

Hi Doug

it’s me again ; )

After those changes everything is working fine, but I have noticed another slowing down of my database.

Right now when I have “first” entries into audit field, every each change saves quick – exept if I make “replace field content”. Then “replace window” show records remianing to update which are 500 less than my total amount – sandglass shows up for around minute, then records remianing to update are 500 less than before and sandglass shows up again for another minute, it repeats till all my records are updated. This time I have placed the file on server.

Is there any chance to make quicker ??

Thanks in advance

Hi Doug

We are in process of modifying the Easy-Bake Audit Trail as a separate file.

An issue exists when trying to pass values from the Custom Function eX_Audit to the external Audit file

– any thoughts on how to “pass the values” is appreciated

My typical approach is to use the BE_FileMakerSQL function with the free BaseElements plugin to insert records into an audit log table. That could be added to the eX_Audit custom function where we’re setting the text to be displayed in the _audit field without much trouble.

The first change I make to a record records each field on the record as changed from “” to the new value. After that, it works fine. Can you help me to correct this activity on the first change to the record?

The process has to look in the _audit field to find the original contents of each field being audited. If you added the logging to an existing solution then it’s possible that the _audit field wasn’t initialized to show the original contents of each field at that time.

If this is the issue then you can turn off the “prohibit modification” setting on the _audit field, replace the contents of that field with an empty string which will trigger the initial audit on each record, then turn the “prohibit modification” setting on again.

Thanks Doug. It appears the entry of an empty space into the audit field triggers that same activity showing all fields change from “” to the new value. Struggling to find a means to avoid this, although not a deal breaker.

Is this only for records that were originally created before you added the audit function to your solution? You could just perform a find to isolate records where the audit field is empty and use the Replace Field Contents option to initialize the audit field on only those records.

Sincerely appreciate your responses Doug. I tried a few things.

I cleared all records and re-imported them with perform auto enter (the audit field already in the solution). This recorded all fields in each record as changing from [“” to New Value] in the _audit field. I think this is behaving as intended. The first touch to any record is recording all fields as being updated, any change past that is recorded correctly. Replacing field contents was tested and yields the same result. I can’t find any way to have this bypass this behavior on the first change to a record. I am happy to just trigger the initial and go from there, but see no way to clear the field contents.

Turning off the auto calculate functionality, clearing contents, then going back in still shows all fields as changing for the first time.

I’m interested in your suggestion to use the BE_FileMakerSQL function on an iPad running off of FMserver to put values in a table. Apparently the plugin does not work with FMGo. Any suggestions?

I’d probably stick with the current auditing method (without the plugin) as the first pass. You can then schedule a script on the server to:

1. Parse contents of the audit field on each record that’s not empty

2. Use the SQL function to create records in the separate table for each audit event

3. Clear the audit log on the record

You can use a global variable to suspend the auditing function while you’re running the script on the server, if necessary.

I have noticed that the record creation noticeably slower. Is there a way to prevent the _audit field from triggering whenever a record is created?

Hello Doug, Thank you again for sharing this. It is a great and easy way to create a change log!

I, too, had to delete the lines that had to do with repeating fields in the ex_Audit custom function (your message from April 29th at 12:08 pm; and thanks to Mariusz for the hint on April 30th at 12:56 pm). After removing this, it worked really fast. Very happy 🙂

Hi Doug,

Thanks for making this available to the community!

I just finished updating to this solution from your previous audit trail and I am having an issue with some of my excluded fields still being tracked in the _audit field. Specifically my auto-enter modification timestamps (local and host). I have confirmed that they are being stored correctly in the $$eX_AuditExclusions variable, which also list other fields that are being excluded properly. Any thoughts?

The list of excluded fields is checked using the FilterValues() function for only the field name without the table occurrence. I don’t see anything in the custom function that would allow auditing to occur when the field name exactly matches an entry in your return-delimited list of exclusions.

Any thoughts on using this in systems that connect to mySQL databases? The auto enter calc text field can’t be added to a mySQL table. Any thoughts on a workaround?

Auditing within a MySQL database should happen within the MySQL environment. This technique relies on FileMaker’s Get(ModifiedFields) function which can only identify fields within a FileMaker-native table.

I have been using this function for a while now and like it really much.

Is it possible to have this running in certain tables as a script trigger?

Kind regards,

Baran

This is a slick function, thank you for sharing it!

I’m looking to track only a single field within a database, is there an easier way to do that other than excluding all the other fields? The database has a lot of fields to list in the exclusion plus any time a new field is added, I’d need to remember to exclude it as well.

The auto-enter calculation in the _audit field controls when the auditing happens. The original calculation shows:

GetField ( "" ) & eX_TriggerAudit ( GetFieldName ( Self ) )Changing the GetField() to something that only evaluates based on the desired field would limit the scope. For example, in the demo file, changing that calculation to the following would cause it to only evaluate when the last name field is modified:

Case ( IsEmpty ( Left ( lastName ; 0 ) ) ; eX_TriggerAudit ( GetFieldName ( Self ) ) )Thanks, this is what I was looking for.

I will need to investigate and find a way, but my solution is a mix of SQL tables and FM tables. I only allow users to modify the customer’s info in our accounting apps, but it’s complicated as some people of the sales’s team never use that application and rely on someone else doing the modification… So I wanted to create an edit screen and log those changes because I know I will need to track who’s responsible for messing thing up 🙂

Since it’s not possible to have this field in FM, I added in my SQL table and set the auto-calculation. On what I understand for SQL data, FM query all the fields and returns all the data back, making the _audit fill with all fields mark as modified when in fact I only did it for one field.

Do you have a work around ? Suggestion ?

Thanks

And it doesn’t provide the previous value, it always return “” and the refresh once I commit the record is very long.

8/22/2022 11:28:48 AM: jff changed ?contact1? from “” to “test”

8/22/2022 11:28:48 AM: jff changed ?contact2? from “” to “test”

after a change

8/22/2022 11:30:17 AM: jff changed ?contact1? from “” to “test”

8/22/2022 11:30:17 AM: jff changed ?contact2? from “” to “test2”

My Bad… just saw the post on MySQL

Thanks! Very nice function.

Is it possible to make this work in a data separation model?

As of FileMaker 2023, it appears that Get(ModifiedFields) returns results when using Replace Field Contents. I’m guessing that this improvement was made to support the native audit logging features added in this version.

Thanks for the tip! Have you noticed a significant performance degradation as a result?

I tried implementing this in my solution. I have encountered a problem. Something in the custom functions is triggering referenced files to open, even for users who don’t need to access them and don’t have login credentials. Consequently, they are being shown the sign in dialog for a database they don’t have access to and are confused by. The layout they are on has no links to the referenced file and does not trigger the sign-in dialog. But, now that I’m trying to install this feature, they’re getting hung up. How can the CFs be modified to not trigger opening a referenced file? Is there something in the eSQL bit that is pulling fields from all files, even hidden ones? Can it be modified to look at only the current file open and the tables therein? Thanks!

Are you sure the login prompt is triggered by the audit trail? The custom function only references fields within the same table so there’s nothing inherent to the calculation that would trigger an external reference. I’ve seen a similar problem in setting unrelated to the audit trail which were resolved by recovering the database file. Maybe that will help in this case as well.

Hi, I drafted a full reply and then my browser lost it. I’ll try to rewrite. It definitely was that function. Turning it off fixed the behavior every time. What I did determine was that the relationship that was connecting the current table to the external table wasn’t actually needed, so I removed it. As soon as I did that and turned the audit back on, it no longer triggered the login for the other database file. But, I was still faced with a major lag problem. I’m not sure if it’s due to the user’s computers being slightly less powerful than mine, or the wide table, or lots of relationships. I’m working on improving a legacy system so the table is very wide, and has many connections on the relationship graph. Even with limiting the audit to 1 field, it’s still too slow to be useable for us. So then I thought perhaps it could be the eSQL triggering on the wrong context that’s causing it to slow?… I’m out of ideas.

I was very excited to see this solution. Simple, elegant and easy to understand and implement. I tested it out and it worked very well.

Then I implemented it in my production system. = DANGER! Two problems emerged. #1. When a new record was created, it somehow triggered summary fields to calculate and with thousands of records in the table, it tried to calculate thousands of records… painful. #2. Imports were also slowed down dramatically.

So, I have disabled it in all tables. That’s a shame… it seemed like a good technique.

Did the audit log capture the values of the summary fields, or did the system simply evaluate those fields without storing them in the audit log?

When importing records, do you want to skip the auditing? That could be added with a global variable that tells the custom function not to look for changes.

Is there an easy way to have this audit only 2 fields without having to add each exclusive into the startup script manually?

Yes, this can be done easily. This tip file relies on all fields reported by the Get(ModifiedFields) function within the custom function named “eX_TriggerAudit”. You could filter that list using the FilterValues() function to include only those two field names when handing off to the “eX_Audit” custom function.